Recommended Audience: Anyone with an infrastructure, vSphere, VMware Cloud Foundation, Kubernetes, or DevOps background.

What to expect from this post: Configuring Workload Management in vSphere 7 with Kubernetes.

Brief Overview:

vSphere 7 with Kubernetes is powered by VMware Cloud Foundation 4 and provides the following services:

- vSphere Pod Services: This is the focus of this post. It lets developers run containers natively and securely on vSphere without having to manage virtual machines, containers, or K8s clusters, whether manually or through other automation.

- Registry Services: Harbor is provided natively, so developers can store and manage Docker/OCI images. We will cover Harbor in another post.

- Network Services (CNI): As covered in the NSX-T Architecture post and others, NSX-T 3.0 is tightly integrated with vSphere 7. Through the Kubernetes CNI (Container Network Interface), developers can define virtual routers, external load balancers, and firewall rules for their pods and applications.

- Storage Services (CSI): vSphere, together with vSAN or vVols (other storage is supported too), provides the storage policies and devices (block and file) that are consumed as Kubernetes storage classes. Developers can then provision persistent volumes from those storage classes for their virtual machines, containers, and K8s clusters.
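To make the storage-class idea concrete, here is a minimal sketch of a PersistentVolumeClaim that asks for a volume from a storage class backed by a vSphere storage policy. It builds the manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The class name `vsan-default-storage-policy`, the claim name, and the 5 Gi size are hypothetical; use a class name that `kubectl get storageclass` actually lists in your namespace.

```python
import json
from typing import Any, Dict


def pvc_manifest(name: str, storage_class: str, size_gi: int) -> Dict[str, Any]:
    """Build a PersistentVolumeClaim manifest that requests a block volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Storage class exposed from a vSphere storage policy (name is hypothetical).
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # Save the output to pvc.json and apply it with: kubectl apply -f pvc.json
    print(json.dumps(pvc_manifest("demo-pvc", "vsan-default-storage-policy", 5), indent=2))
```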

Workload Management Prerequisites:

As outlined in the vSphere with Kubernetes Overview post, here we create a Supervisor Cluster by configuring Workload Management. The prerequisites for Workload Management are:

- vSphere Cluster licensed with a valid Workload Management license key

- vSphere Cluster must have a minimum of two ESXi hosts

- vSphere Cluster configured with Fully Automated DRS

- vSphere Cluster configured with vSphere HA

- vSphere Cluster configured with a vSphere Distributed Switch 7.0

- vSphere Cluster configured with sufficient storage capacity
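If you want to track these prerequisites as a checklist, the sketch below encodes them as simple checks. The `ClusterInfo` fields are hypothetical: you would fill them in by hand from the vSphere Client or from your own inventory tooling. The `"fullyAutomated"` string mirrors the DRS behavior value used by the vSphere API, and because the list above does not give a storage figure, `min_storage_gb` is whatever threshold you planned for.

```python
from dataclasses import dataclass
from typing import List

MIN_HOSTS = 2  # minimum host count from the prerequisite list above


@dataclass
class ClusterInfo:
    """Hypothetical summary of a cluster; fill it in from the vSphere Client or your own tooling."""
    name: str
    wm_licensed: bool
    host_count: int
    drs_behavior: str  # "fullyAutomated" mirrors the vSphere API DRS behavior value
    ha_enabled: bool
    vds_version: str   # e.g. "7.0.0"
    free_storage_gb: float


def check_prerequisites(c: ClusterInfo, min_storage_gb: float) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if not c.wm_licensed:
        problems.append("no valid Workload Management license")
    if c.host_count < MIN_HOSTS:
        problems.append(f"only {c.host_count} host(s); need at least {MIN_HOSTS}")
    if c.drs_behavior != "fullyAutomated":
        problems.append(f"DRS is '{c.drs_behavior}', not fully automated")
    if not c.ha_enabled:
        problems.append("vSphere HA is not enabled")
    if int(c.vds_version.split(".")[0]) < 7:
        problems.append(f"vDS version {c.vds_version} is older than 7.0")
    if c.free_storage_gb < min_storage_gb:
        problems.append(f"{c.free_storage_gb} GB free is below the planned {min_storage_gb} GB")
    return problems
```

For example, `check_prerequisites(ClusterInfo("wld-cluster", True, 3, "fullyAutomated", True, "7.0.0", 2048.0), min_storage_gb=500)` returns an empty list.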

vSphere Cluster Compatibility Verification for Workload Management:

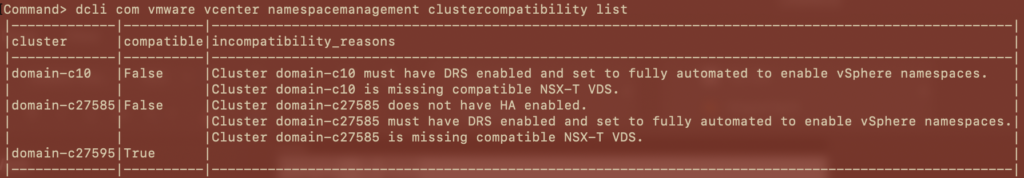

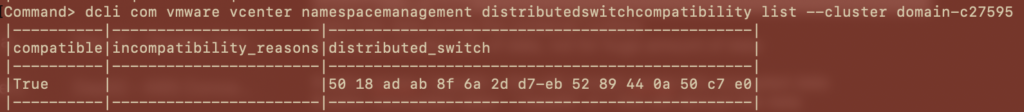

Before configuring Workload Management, you can SSH into the vCenter Server Appliance and run the following commands to verify whether the cluster on which you’re enabling Workload Management is compatible:

- Check the vSphere Cluster compatibility: `dcli com vmware vcenter namespacemanagement clustercompatibility list`

- Check the vDS compatibility (domain-c27595 is my cluster’s ID; replace it with yours): `dcli com vmware vcenter namespacemanagement distributedswitchcompatibility list --cluster domain-c27595`

- Check the NSX-T Edge Node compatibility: `dcli com vmware vcenter namespacemanagement edgeclustercompatibility list --cluster domain-c27595 --distributed-switch "50 18 ad ab 8f 6a 2d d7-eb 52 89 44 0a 50 c7 e0"`
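If you run these checks repeatedly, a small helper can assemble the three dcli commands so the options are always spelled with a double hyphen and the space-separated vDS UUID is quoted correctly. This is a minimal sketch: by default it only prints the shell-quoted commands, and it assumes Python 3.8+ and, for the optional `--run` flag, that it is executed on the vCenter Server Appliance where `dcli` is on the PATH. The cluster ID and vDS UUID are the ones from this post; substitute your own.

```python
import shlex
import subprocess
import sys
from typing import List, Optional

# Shared dcli namespace prefix used by all three compatibility checks.
BASE = ["dcli", "com", "vmware", "vcenter", "namespacemanagement"]


def compat_command(kind: str, cluster: Optional[str] = None,
                   dvs: Optional[str] = None) -> List[str]:
    """Build the argument list for one '<kind> list' compatibility check."""
    args = BASE + [kind, "list"]
    if cluster:
        args += ["--cluster", cluster]
    if dvs:
        args += ["--distributed-switch", dvs]
    return args


def run(args: List[str]) -> str:
    """Run a command (only works where dcli is on PATH) and return its stdout."""
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    cluster = "domain-c27595"  # the cluster ID from this post; replace with yours
    dvs = "50 18 ad ab 8f 6a 2d d7-eb 52 89 44 0a 50 c7 e0"
    checks = [
        compat_command("clustercompatibility"),
        compat_command("distributedswitchcompatibility", cluster),
        compat_command("edgeclustercompatibility", cluster, dvs),
    ]
    for args in checks:
        print(shlex.join(args))  # shell-quoted, ready to paste
        if "--run" in sys.argv[1:]:
            print(run(args))
```

Passing the arguments as a list (rather than one string with `shell=True`) keeps the spaces inside the vDS UUID from being split into separate arguments.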

Workload Management Configuration:

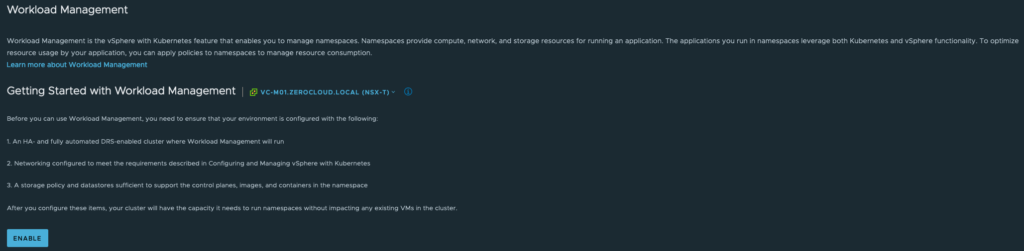

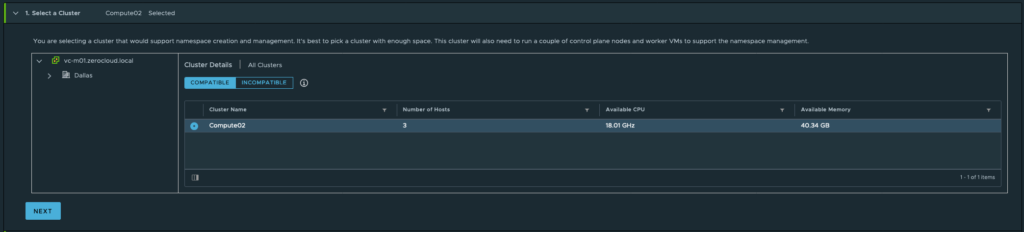

Now that we have verified that the cluster is compatible and passes all the prerequisite checks, we’re ready to configure Workload Management. Let’s dive right in.

Log in to the vSphere Client as administrator@vsphere.local. Click Menu > Workload Management > Enable.

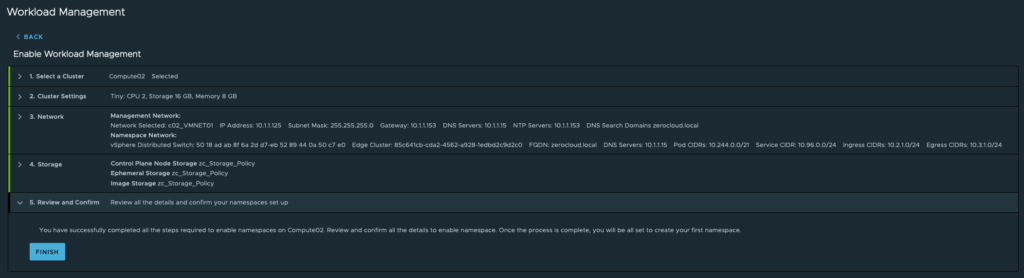

Since we already know that the cluster is compatible, it should appear in the Compatible list. Select the cluster and click Next.

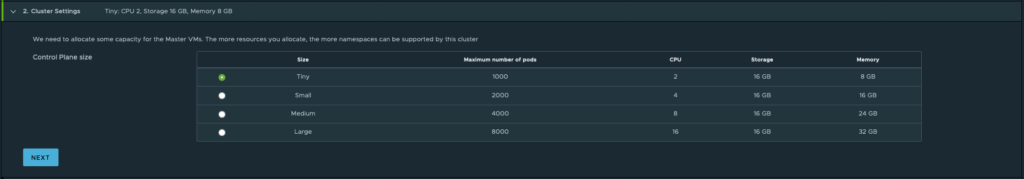

Select the size of the Master VM, which runs the Master Node of the Supervisor Cluster. I chose Tiny. Click Next.

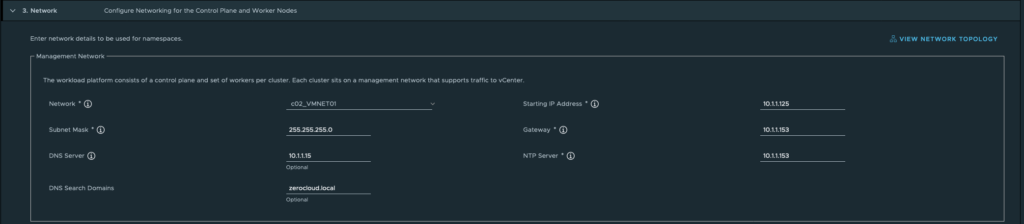

Networking is probably the most complex step in this configuration. For the Management Network, select the network on which the Master Nodes will reside > provide a starting IP address, subnet mask, default gateway, DNS server, NTP server, and DNS search domain for the Master Nodes.
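The starting IP address is the first of a block of consecutive addresses the Supervisor Cluster claims on the management network. VMware’s documentation describes this as a block of five (three Master Node VMs, a floating IP, and one spare used during upgrades); treat that count as an assumption and confirm it for your version. The sketch below expands the block with Python’s `ipaddress` module and checks that every address, and the gateway, fits in the subnet. The addresses in the example are hypothetical.

```python
import ipaddress
from typing import List


def management_ip_block(start: str, netmask: str, gateway: str, count: int = 5) -> List[str]:
    """Expand the starting IP into `count` consecutive addresses and sanity-check them."""
    first = ipaddress.IPv4Address(start)
    network = ipaddress.IPv4Network(f"{start}/{netmask}", strict=False)
    gw = ipaddress.IPv4Address(gateway)
    block = [first + i for i in range(count)]
    for ip in block:
        # Every address must be usable: inside the subnet, not network or broadcast.
        if ip not in network or ip in (network.network_address, network.broadcast_address):
            raise ValueError(f"{ip} is outside the usable range of {network}")
    if gw not in network:
        raise ValueError(f"gateway {gw} is not in {network}")
    if gw in block:
        raise ValueError(f"gateway {gw} collides with the Master Node block")
    return [str(ip) for ip in block]


if __name__ == "__main__":
    print(management_ip_block("10.0.10.20", "255.255.255.0", "10.0.10.1"))
    # ['10.0.10.20', '10.0.10.21', '10.0.10.22', '10.0.10.23', '10.0.10.24']
```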

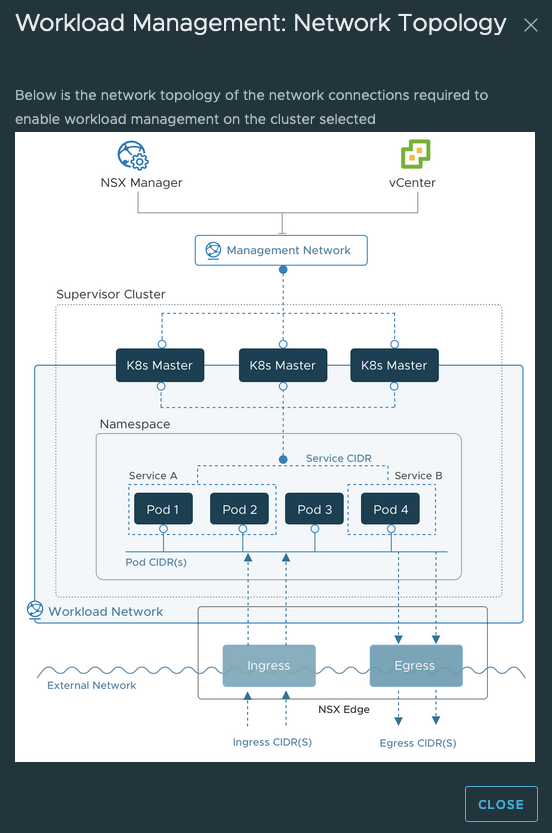

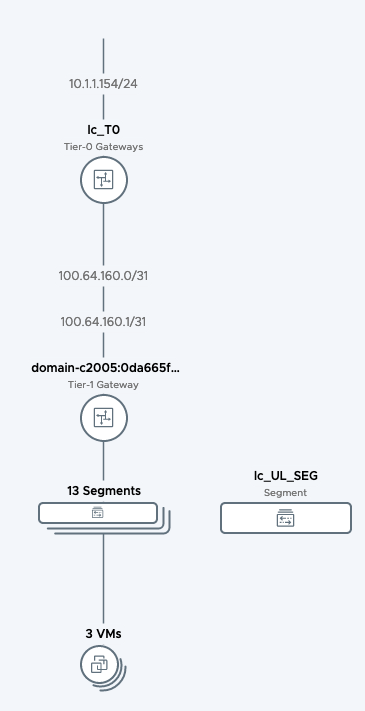

Here’s a sample network topology showing how the various Supervisor Cluster components are connected.

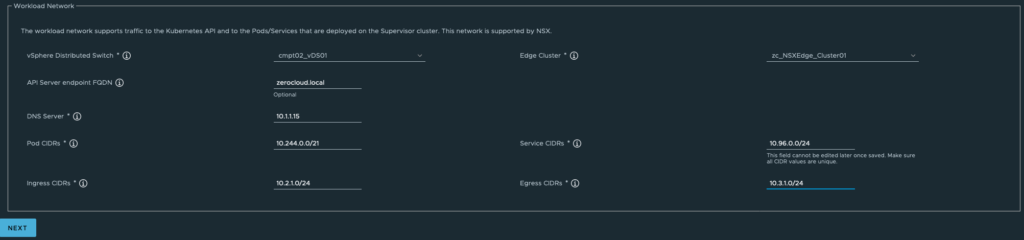

For the Workload Network > select the vDS > select the Edge Cluster (configured in the NSX-T 3.0 Deployment for TKG ~ step06 post) > provide the domain name for the API Server endpoint > provide the DNS server > leave the default Pod CIDRs and Service CIDRs as they are > for the Ingress and Egress CIDRs, provide routable IP addresses from separate subnets > click Next.
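Because the Pod, Service, Ingress, and Egress ranges must not collide, it can help to check a plan before typing it into the wizard. This minimal sketch reports every overlapping pair using `ipaddress.ip_network(...).overlaps()`. The Pod and Service values are assumed to be the wizard’s defaults (10.244.0.0/21 and 10.96.0.0/24); check what your wizard shows. The Ingress and Egress ranges are hypothetical.

```python
import ipaddress
from itertools import combinations
from typing import Dict, List, Tuple


def find_overlaps(cidrs: Dict[str, str]) -> List[Tuple[str, str]]:
    """Return every pair of named CIDRs whose address ranges overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [(a, b) for a, b in combinations(nets, 2) if nets[a].overlaps(nets[b])]


if __name__ == "__main__":
    plan = {
        "pod": "10.244.0.0/21",        # assumed wizard default
        "service": "10.96.0.0/24",     # assumed wizard default
        "ingress": "192.168.50.0/24",  # hypothetical routable range
        "egress": "192.168.60.0/24",   # hypothetical routable range
    }
    print(find_overlaps(plan) or "no overlaps")
```

An empty result only rules out collisions inside this plan; whether the Ingress and Egress ranges are actually routable still depends on your upstream network.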

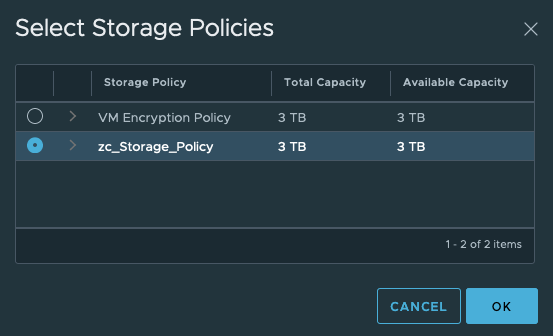

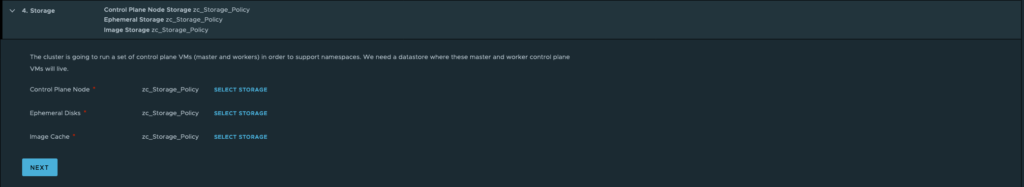

For Storage, choose the storage for the control plane nodes, ephemeral disks, and image cache by selecting a storage policy for each and clicking OK > click Next.

Review the settings and click Finish.

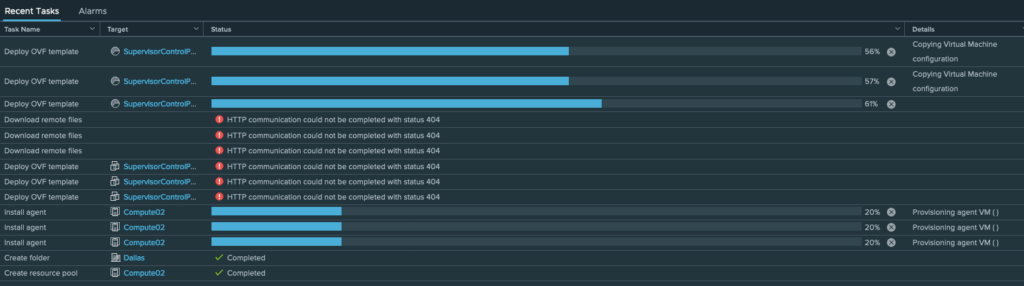

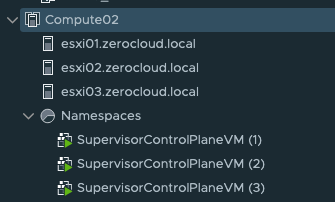

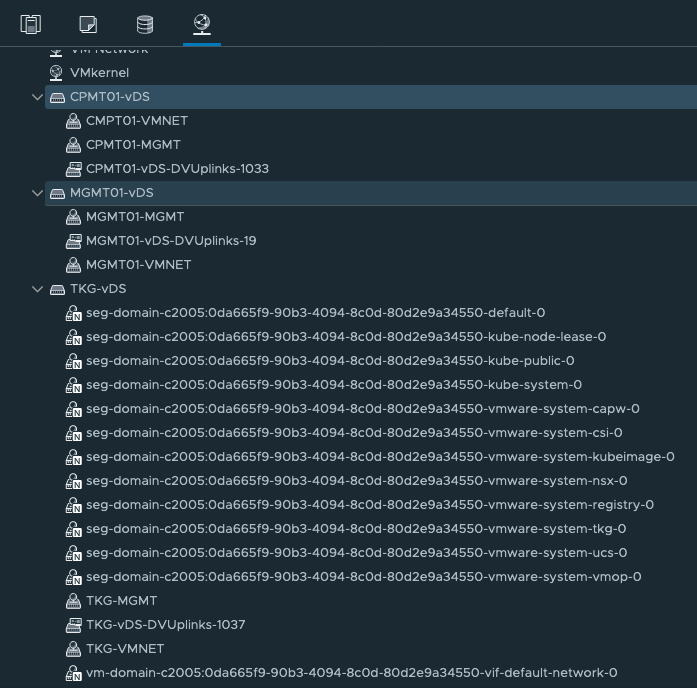

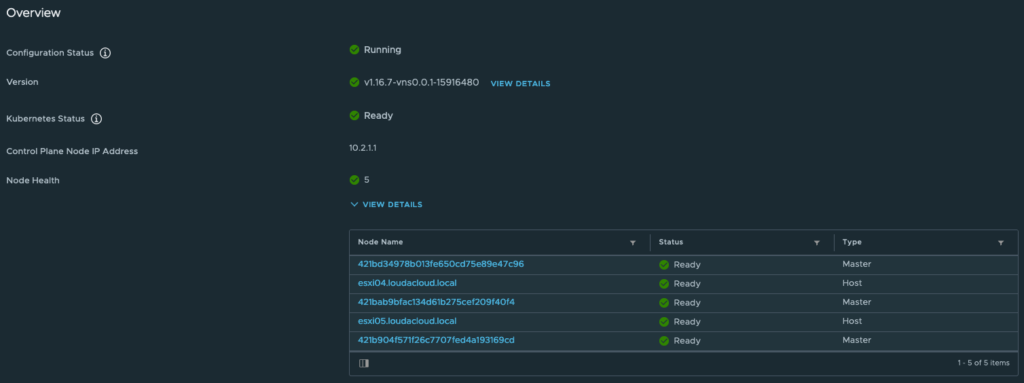

You’ll now see many tasks submitted in vSphere. A Namespaces resource pool is created, visible in both the Hosts and Clusters and the VMs and Templates views, along with several SupervisorControlPlaneVMs (one Master Node per ESXi host). After the deployment completes, the Master Node VMs are powered on.

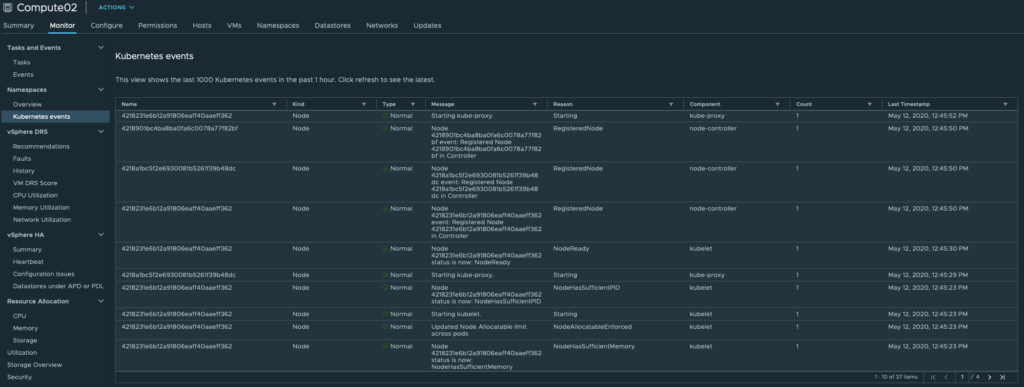

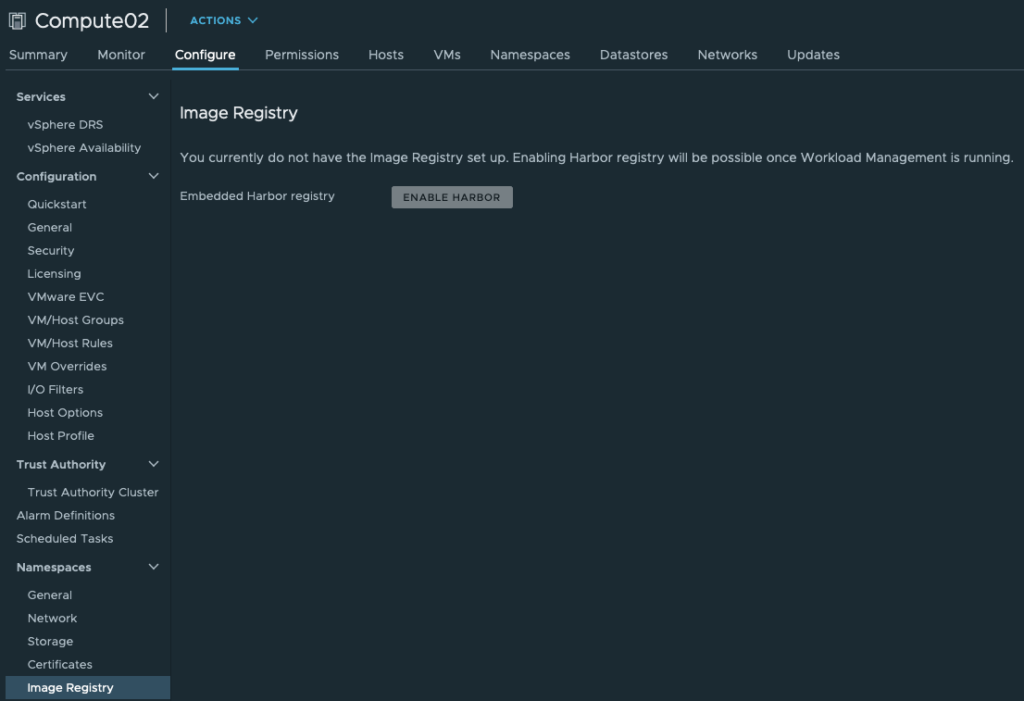

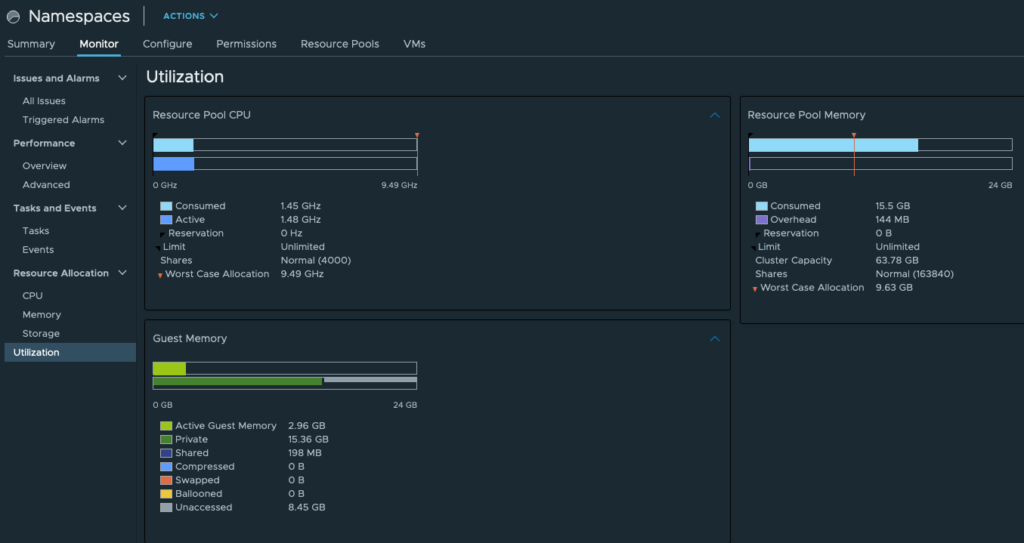

You can also observe Kubernetes events under the vSphere cluster’s Monitor tab, and a Namespaces section under the cluster’s Configure tab.

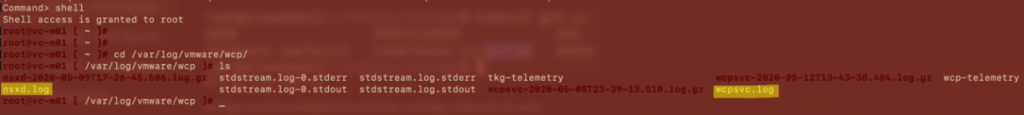

To understand what’s happening during the configuration, check the vCenter Server logs, particularly wcpsvc.log and nsxd.log.
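Both logs get long during enablement, so a quick filter for error and failure lines can save some scrolling. This is a minimal sketch that assumes the logs live in `/var/log/vmware/wcp/` on the vCenter Server Appliance (verify the path on your appliance) and that Python 3 is available there; the keyword match is deliberately crude and will also catch lines that merely mention an error.

```python
import re
from pathlib import Path
from typing import Iterable, Iterator

LOG_DIR = Path("/var/log/vmware/wcp")  # assumed location on the vCenter Server Appliance
PATTERN = re.compile(r"error|fail", re.IGNORECASE)  # crude keyword match


def interesting_lines(lines: Iterable[str]) -> Iterator[str]:
    """Yield lines that mention an error or a failure, without trailing newlines."""
    for line in lines:
        if PATTERN.search(line):
            yield line.rstrip("\n")


if __name__ == "__main__":
    for name in ("wcpsvc.log", "nsxd.log"):
        path = LOG_DIR / name
        if not path.exists():
            print(f"{path} not found")
            continue
        with path.open(errors="replace") as f:
            for line in interesting_lines(f):
                print(f"{name}: {line}")
```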

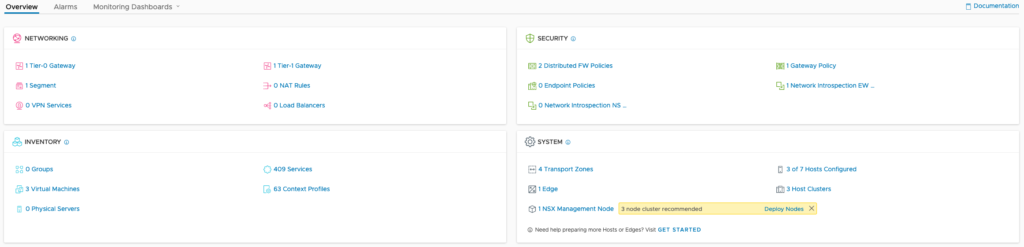

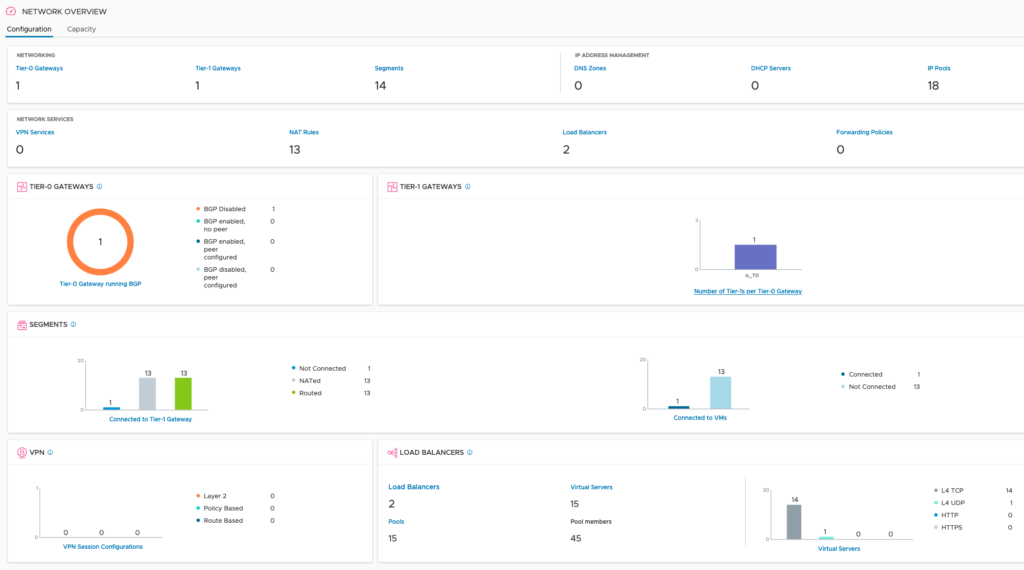

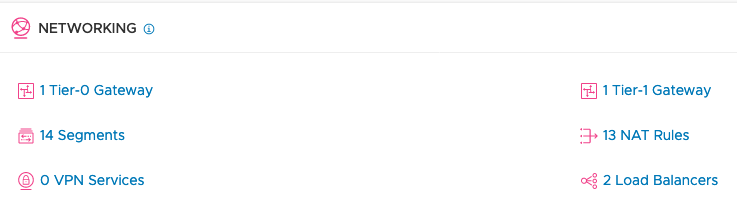

Also, observe the changes in the NSX-T environment; in particular, note that a Tier-1 Gateway is created.

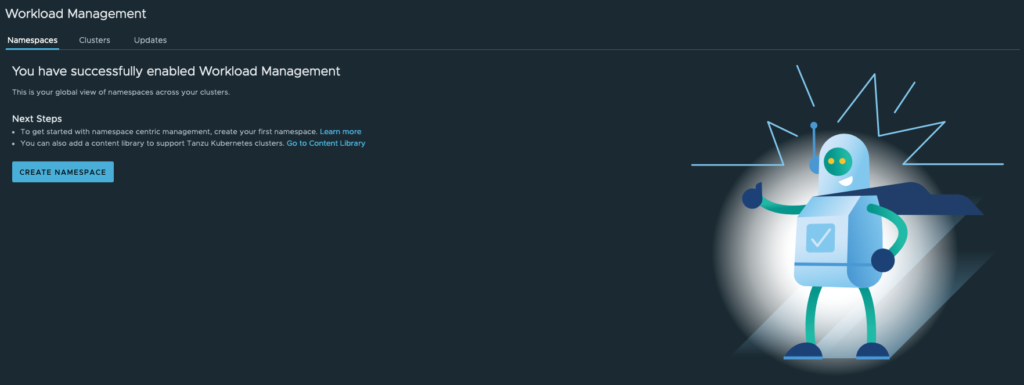

Workload Management stays in the Configuring state for a long time; I had a nice dinner and came back to the same screen. For me it took almost an hour. Once it finishes, you’re ready to create namespaces and share them with developers.
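If you would rather script the wait than watch the screen, the usual pattern is to poll with a deadline. In this sketch, `get_status` is a hypothetical callable you supply (for example, one that parses CLI or API output), and the status strings `RUNNING` and `ERROR` are assumptions modeled on the states the UI reports. The two-hour default timeout simply leaves headroom over the roughly one hour it took here.

```python
import time
from typing import Callable


def wait_for_status(get_status: Callable[[], str], target: str = "RUNNING",
                    timeout_s: float = 2 * 3600, interval_s: float = 60,
                    sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status() until it returns target, reports ERROR, or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        if status == target:
            return status
        if status == "ERROR":
            raise RuntimeError("Workload Management reported ERROR; check wcpsvc.log")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"still {status} after {timeout_s} s")
        sleep(interval_s)
```

Injecting `sleep` makes the loop easy to exercise without waiting, and `time.monotonic()` keeps the deadline correct even if the wall clock changes.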

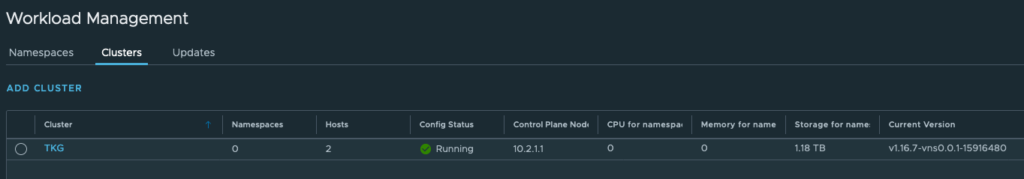

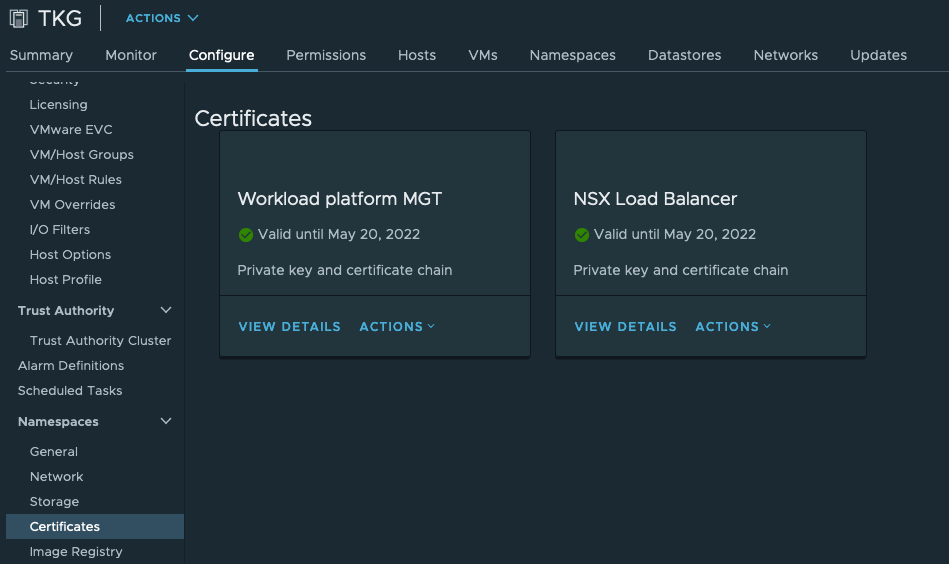

Here are a few more screenshots of vCenter after Workload Management was successfully enabled:

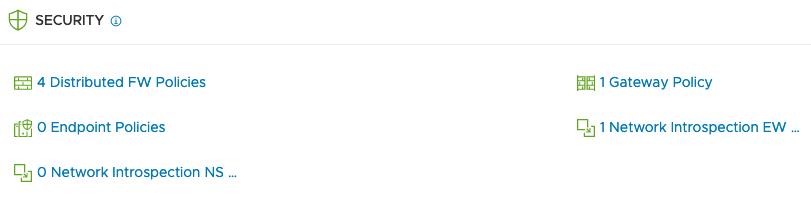

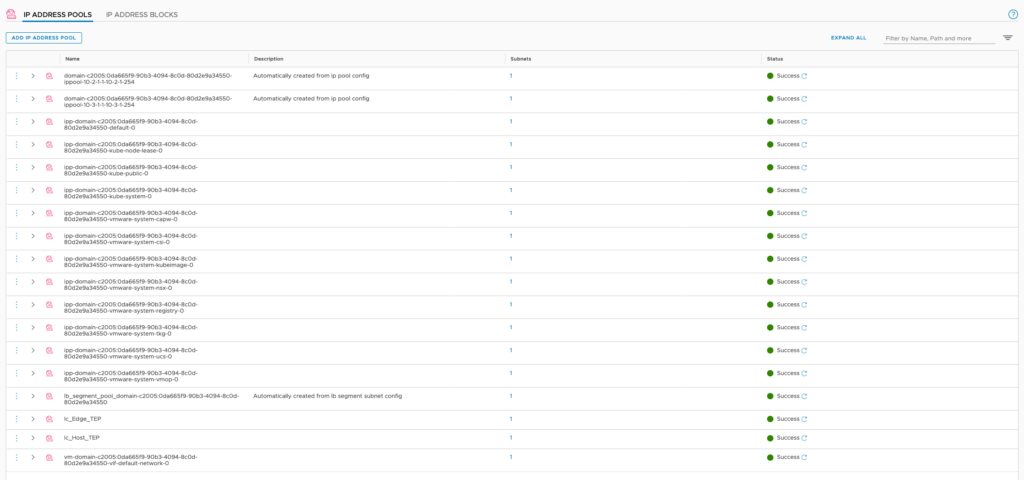

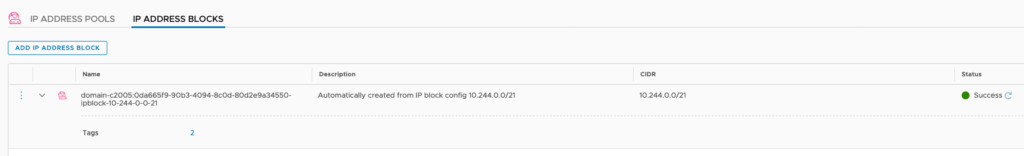

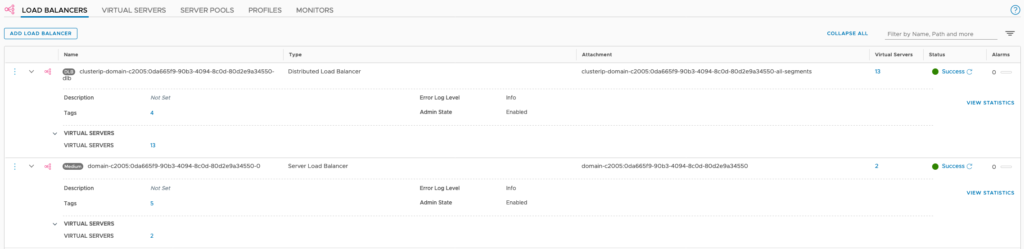

And here are a few screenshots of NSX-T after Workload Management was successfully enabled:

That wraps up enabling vSphere with Kubernetes. See you in the next post, where we’ll cover creating guest clusters in more detail.